Update 6/9/2010 – As philrosenstein points out in the comments below, a similar mechanism was made available in Rails 2.1 using Rails.cache. See Railscast #115 for an introduction.

When I first heard about memcached I was excited by the promise of a very fast caching mechanism that could store anything, but a little frightened by the idea of dipping my toes into the caching world. Isn’t caching hard? Not the actual process of storing something. Expiring things from the cache is a different story, and I’m only going to deal with the first problem here.

So, how easy is it? First, get memcached. If you’re running something like Ubuntu, this is as easy as:

```sh
sudo apt-get install memcached
```

Or, if you have MacPorts on your Mac:

```sh
sudo port install memcached
```

Once you have memcached installed, you’ll want to start it running with `memcached -vv`.

The `-vv` flag puts memcached in very verbose mode, so you get to see all the action. Once you’re ready to go for real you’ll run it as a daemon instead (replace `-vv` with `-d`).

My example below uses Ruby and Rails, but there are memcache libraries for just about every language out there. For Ruby we’re using memcache-client, and you’ll need the gem:

```sh
sudo gem install memcache-client
```

Okay, all the hard stuff is out of the way. Rails already tries to require 'memcache', so you don’t need to worry about that at all. At the end of your config/environment.rb file, create a MemCache instance and assign it to a constant so it’s around whenever you need it:

```ruby
CACHE = MemCache.new('127.0.0.1')
```

Now we’ll add a simple method to our application controller so that this new caching mechanism is available to all of our controllers. Make sure this method is private:

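```ruby
private

# Return the cached value for +key+. On a miss, run the block,
# store its result in memcache for one hour, and return it.
def data_cache(key)
  unless output = CACHE.get(key)
    output = yield
    CACHE.set(key, output, 1.hour)
  end
  output
end
```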

memcache stores everything as simple key/value pairs: you either ask it whether it has a value for a given key, or hand it a value along with a key to store. data_cache first tries to get the value out of the cache. Only if it isn’t found does it run the block you pass when calling it (that’s next), store the block’s result in the cache under the given key, and tell memcache to expire it after 1.hour. Every time you ask for that key within the next hour you’ll get the same result straight from memory. Once it expires, the next request misses, the block runs again, and the fresh result is stored for another hour.
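To see the hit/miss/expire cycle without a running memcached server, here is a minimal plain-Ruby sketch. FakeCache is a hypothetical in-memory stand-in for the memcache-client object that implements only `get` and `set(key, value, ttl)`; the injectable clock and passing the cache to data_cache as an argument (instead of using the CACHE constant) are assumptions made so the example can be run and checked.

```ruby
# Hypothetical in-memory stand-in for the memcache client.
class FakeCache
  # clock: a callable returning the current Time (injectable for testing).
  def initialize(clock = -> { Time.now })
    @clock = clock
    @store = {}
  end

  # Returns the stored value, or nil if the key is missing or has expired.
  def get(key)
    value, expires_at = @store[key]
    return nil if expires_at.nil? || @clock.call >= expires_at
    value
  end

  # Stores value under key for ttl seconds.
  def set(key, value, ttl)
    @store[key] = [value, @clock.call + ttl]
  end
end

ONE_HOUR = 60 * 60 # plain-Ruby stand-in for ActiveSupport's 1.hour

# Same read-through logic as data_cache, with the cache passed in explicitly.
def data_cache(cache, key)
  unless (output = cache.get(key))
    output = yield # miss: compute the value
    cache.set(key, output, ONE_HOUR)
  end
  output # either the cached value or the freshly computed one
end

now = Time.at(0)
cache = FakeCache.new(-> { now })
calls = 0

3.times { data_cache(cache, 'foo') { calls += 1; 'Hello, world!' } }
first_hour_calls = calls # the block ran once; the other two calls were hits

now += ONE_HOUR # move the fake clock to the expiry time
data_cache(cache, 'foo') { calls += 1; 'Hello, world!' } # miss, so the block runs again
```

Three calls inside the hour run the block once; after the clock reaches the expiry, the next call runs it again and restarts the hour.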

As a very simple example, you could use this in your controllers like so:

```ruby
result = data_cache('foo') { 'Hello, world!' }
```

So, if the cache contains a key called ‘foo’, data_cache returns the stored value and it ends up in result. If not, data_cache stores Hello, world! under the key foo and returns that to result. Either way, result ends up with what you want (the contents of the block). If you look at the memcached output back in the terminal, you’ll see it trying to get and store data by that key.
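One subtlety of the `unless output = CACHE.get(key)` test: a miss comes back as `nil`, so if the block itself returns `nil` or `false`, that result never counts as a hit and the block runs on every call. A common workaround, sketched below, is to wrap the value in a one-element array before storing it. HashCache and data_cache_wrapped are hypothetical names, and the sketch assumes the cache client can serialize arbitrary Ruby objects such as arrays.

```ruby
# Hypothetical hash-backed stand-in; like a cache client, get returns nil on a miss.
class HashCache
  def initialize
    @store = {}
  end

  def get(key)
    @store[key]
  end

  def set(key, value, _ttl)
    @store[key] = value
  end
end

# Variant of data_cache that stores [value], so a cached nil or false
# is still distinguishable from a miss (which returns plain nil).
def data_cache_wrapped(cache, key, ttl = 3600)
  if (wrapped = cache.get(key))
    return wrapped.first # hit, even when the cached value is nil or false
  end

  output = yield
  cache.set(key, [output], ttl)
  output
end

cache = HashCache.new
calls = 0
2.times { data_cache_wrapped(cache, 'empty') { calls += 1; nil } }
# The block ran only once: the nil result was cached on the first call.
```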

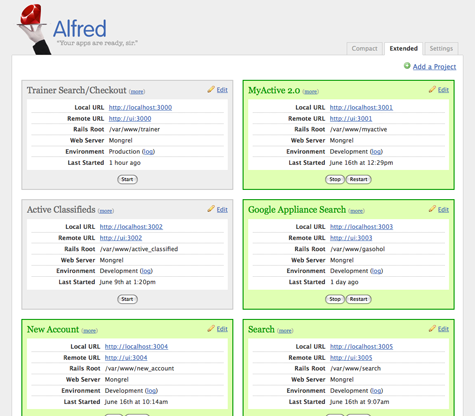

Storing a simple string doesn’t do us much good, so let’s try a real-world example. At work I’m building a new search on top of a Google Search Appliance (GSA). We get some keywords and other search parameters from the user, send them to the GSA, parse the result, and display it to the user. We only update our search index once per day, so if more than one person searches for “running san diego” there’s no reason to go to the GSA every time: the result hasn’t changed since someone asked for it earlier in the day. So we cache the result for 24 hours.
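Caching for 24 hours needs a different expiry than the one hour hard-coded in data_cache. One way to handle that, sketched here under the assumption that you are free to change the helper’s signature, is to take the expiry as an optional argument. data_cache_for and RecordingCache are hypothetical names, and the expiry is given in plain seconds rather than ActiveSupport’s `24.hours`.

```ruby
ONE_HOUR_SECONDS = 60 * 60
ONE_DAY_SECONDS  = 24 * ONE_HOUR_SECONDS

# Hypothetical variant of data_cache with the expiry (in seconds) as a parameter.
def data_cache_for(cache, key, ttl = ONE_HOUR_SECONDS)
  unless (output = cache.get(key))
    output = yield
    cache.set(key, output, ttl)
  end
  output
end

# Minimal stand-in cache that remembers the last expiry it was given.
class RecordingCache
  attr_reader :last_ttl

  def initialize
    @store = {}
  end

  def get(key)
    @store[key]
  end

  def set(key, value, ttl)
    @last_ttl = ttl
    @store[key] = value
  end
end

cache = RecordingCache.new
results = data_cache_for(cache, 'search:running-san-diego', ONE_DAY_SECONDS) do
  ['result 1', 'result 2'] # stands in for the real GSA call
end
```

Keep in mind that memcached treats expiry values larger than 30 days (2,592,000 seconds) as absolute Unix timestamps rather than relative offsets; 24 hours is well inside the relative range.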

A search result on our system can be uniquely identified by the URL generated from the user’s search parameters, so we use that URL as the memcache key. A URL can be pretty long (and memcached keys are limited to 250 characters), so we take its MD5 hash and use that as the key instead:

```ruby
md5 = Digest::MD5.hexdigest(request.request_uri)
output = data_cache(md5) { SEARCH.search(keywords, options) }
```
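To make the key-shortening step concrete, here is a small standalone sketch. search_cache_key is a hypothetical helper name; the point is that `Digest::MD5.hexdigest` always returns 32 lowercase hex characters, however long the input URI is, and the same URI always produces the same key.

```ruby
require 'digest/md5'

# Hypothetical helper: map a request URI of any length to a fixed-size key.
# An MD5 hex digest is always 32 characters, well under memcached's
# 250-character key limit, and contains no spaces or control characters.
def search_cache_key(request_uri)
  Digest::MD5.hexdigest(request_uri)
end

long_uri = '/search?q=running+san+diego&' + ('filter=x&' * 100)
key = search_cache_key(long_uri)
puts "#{long_uri.length} characters -> #{key}"
```

One caveat: the hash is taken over the raw URI, so `?a=1&b=2` and `?b=2&a=1` produce different keys. Equivalent searches only share a cache entry if their parameters are normalized (for example, sorted) before hashing.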

SEARCH is the library that talks to the GSA and parses the result (I hoped to open source it, and it has since been released). What did this do for our response times? Our GSA box is currently located in Australia (it’s a loaner). Between the network latency of talking to the GSA and receiving and parsing the huge XML file it returns (about 50 KB), most requests were taking 1500 to 2000 milliseconds, not counting the trip through the regular Rails stack to get the page back to the user. With memcache in place the same results come back in 1 millisecond. One. That’s a difference of three orders of magnitude!
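Checking the arithmetic behind that claim with the timings quoted above (1500 to 2000 ms uncached, not counting the Rails stack, against 1 ms from cache):

$$
\frac{1500\ \text{ms}}{1\ \text{ms}} = 1.5 \times 10^{3}, \qquad \frac{2000\ \text{ms}}{1\ \text{ms}} = 2 \times 10^{3}
$$

$$
\log_{10} 1500 \approx 3.18, \qquad \log_{10} 2000 \approx 3.30
$$

So the cached path is roughly 1,500 to 2,000 times faster, a little over three orders of magnitude.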

As you can see, adding memcache to your Rails app is stupidly simple and you can start benefiting from it right away. Don’t be scared of caching!

Update: I changed the post to use data_cache rather than cache, because cache is already the name of the fragment caching method in Rails.